Let’s examine some hard data on the impact a VPC has on cold-start in a serverless architecture with AWS Lambda.

This is the second post detailing an investigation into cold-start in AWS Lambda. Check out my previous post for some baseline numbers on the impact cold-start can have on simple Lambda functions. That post will also give some context for what I’m going to discuss here.

So far, we’ve seen that a cold-start adds about 300ms to invocation time. Also, more memory does not improve overall invocation time, though it makes the execution phase faster.

A Virtual Private Cloud (such as AWS VPC) is a common networking tool and something we use quite extensively for our instances and containers. However, serverless functions may very well require a shift in the way we think of VPCs.

What’s in a VPC?

A VPC can be used to ensure private resources (such as databases) are not accessible from the public internet. To be clear – this pattern is not going away anytime soon, even in a serverless world.

However, given VPCs are such common tools, they’ve always been used quite liberally: most of the time, the default stance is to put everything inside a VPC, just in case. This approach doesn’t work well in a serverless environment, for reasons that we’ll soon be able to see.

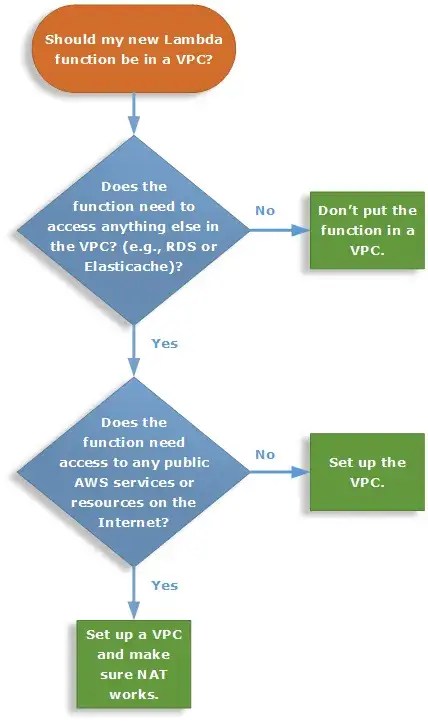

Unsurprisingly, the guidance AWS provide is pretty clear in stating that a function should only be in a VPC if it needs access to resources that are in a VPC. Otherwise, it should be public and rely on IAM for security. The following diagram comes straight from the AWS documentation on Lambda best practices:

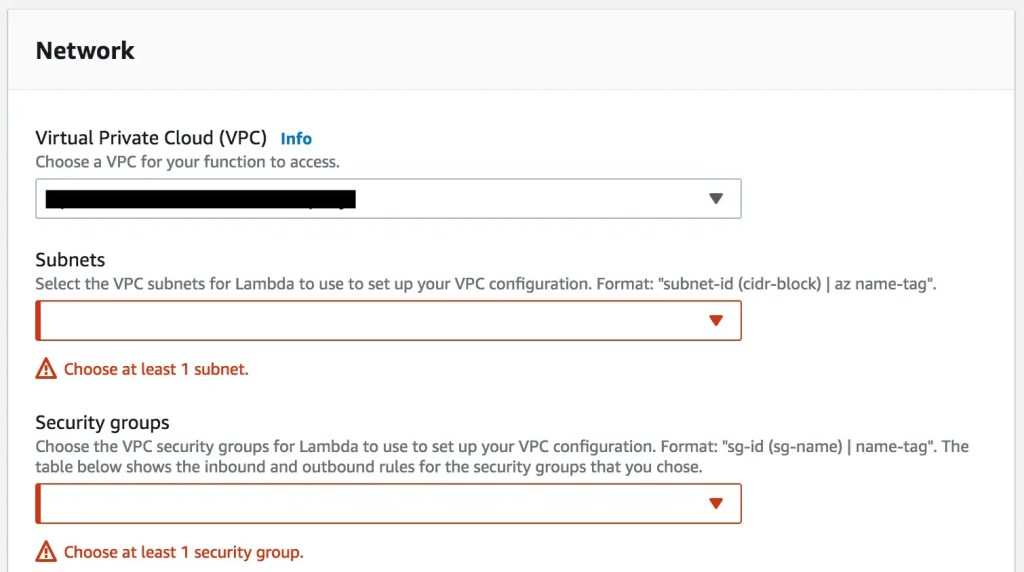

VPCs are not gone, however: the only way for a Lambda function to access a resource inside a VPC is to be part of the VPC itself. This means configuring the function with VPC subnet IDs and security group IDs. This can be done through the AWS Console by editing Networking details for the function or, more sensibly, using something such as the Serverless Framework. You can check out the serverless.yml file I used for this investigation to see how individual functions can be configured with a VPC.
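For readers who script their deployments rather than use the Serverless Framework, the same two settings can be sketched in Python. This is a minimal illustration, not the configuration used in this investigation: the helper names and the subnet and security group IDs are hypothetical placeholders, and the second function assumes `boto3` is installed and AWS credentials are available.

```python
from typing import Dict, List


def build_vpc_config(subnet_ids: List[str], security_group_ids: List[str]) -> Dict[str, List[str]]:
    """Build the VpcConfig structure that Lambda's function configuration API accepts."""
    if not subnet_ids or not security_group_ids:
        raise ValueError("a VPC-attached function needs at least one subnet and one security group")
    return {"SubnetIds": list(subnet_ids), "SecurityGroupIds": list(security_group_ids)}


def attach_to_vpc(function_name: str, vpc_config: Dict[str, List[str]]) -> None:
    """Apply the VPC settings to an existing function (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so build_vpc_config works without the AWS SDK

    boto3.client("lambda").update_function_configuration(
        FunctionName=function_name,
        VpcConfig=vpc_config,
    )


# Placeholder IDs for illustration only; substitute the IDs from your own VPC.
config = build_vpc_config(["subnet-0123456789abcdef0"], ["sg-0123456789abcdef0"])
print(config)
```

Calling `attach_to_vpc("my-function", config)` would then move the (hypothetical) function `my-function` into the VPC.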

When a Lambda function runs in a VPC, elastic network interfaces (ENIs) have to be provisioned to allow access to other resources. The word on the grapevine is that this has a huge impact on cold-start time and should be avoided whenever possible. This is what I set out to investigate.

The experiment

The setup for the experiment is the same as in my previous post: I deployed a bunch of Lambda functions with different memory configurations and I replicated the setup inside and outside a VPC. Then I invoked the functions hundreds of times, making sure I’d trigger a cold-start every time. Finally, I used X-Ray to measure the total time taken by the invocation and more detailed times for 3 phases: Setup, Initialization and Execution.

Results

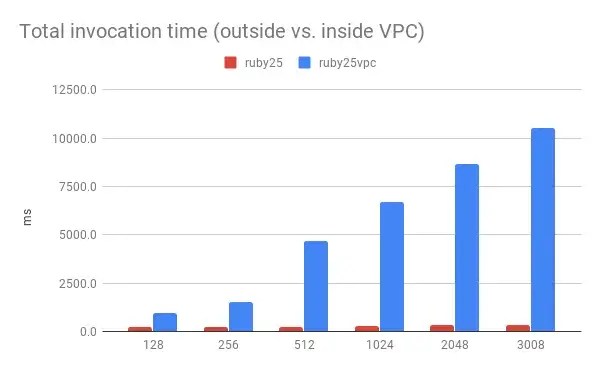

Looking at the average invocation time, it’s pretty clear that a VPC incurs a significant penalty on cold-start.

Functions outside a VPC (marked as ruby25 in the chart above) have slower cold starts the more memory they’re allocated, which is consistent with my previous findings. However, that slight increase is almost invisible next to the massive increase inside a VPC (marked as ruby25vpc above, as you probably guessed).

On average, for a function configured with 128MB, cold-start is about 4 times slower inside a VPC. With 3GB, it’s about 30 times slower.
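The “times slower” figures are simply the ratio of the two averages. As a rough sketch of that arithmetic, with made-up sample values rather than the measurements behind the chart:

```python
from statistics import mean


def slowdown_factor(vpc_ms, non_vpc_ms):
    """Ratio of the average cold-start time inside a VPC to the average outside."""
    return mean(vpc_ms) / mean(non_vpc_ms)


# Hypothetical samples in milliseconds, NOT the measurements from this post.
outside = [200, 380, 210, 370]
inside = [250, 260, 1500, 2630]

factor = slowdown_factor(inside, outside)
print(f"{factor:.1f}x slower inside the VPC")  # prints "4.0x slower inside the VPC"
```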

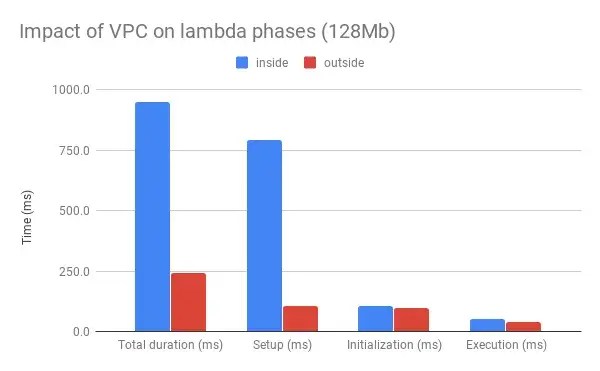

If we dig a little deeper into the various phases that make up a Lambda invocation, we can see that the biggest difference is in the Setup phase. My assumption is that the ENIs are provisioned during this phase, which can sometimes take a long time.

While I’ve only included the 128MB version above, the same pattern applies across all memory configurations.

Time distribution

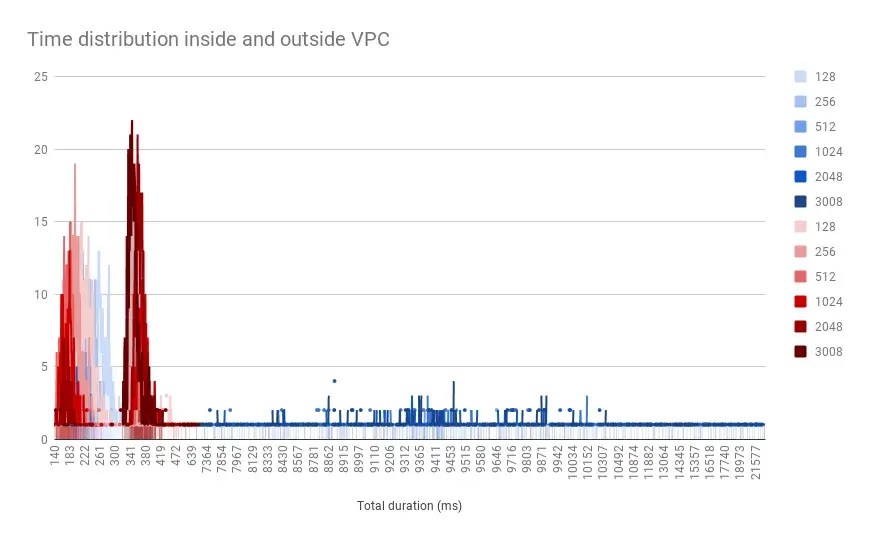

As the average is a pretty rough statistical tool, I’ve also looked at the data from a different angle: time distribution.

The chart above shows how many executions clocked in around a given time, and it gives us a few insights that the average had smoothed away. As before, blue is inside a VPC and red is outside.
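A distribution like this comes from grouping invocation times into fixed-width buckets and counting each bucket. Here is a minimal sketch of that grouping, assuming a 50ms bucket width and a handful of invented durations (neither is taken from the experiment):

```python
from collections import Counter


def bucket_counts(durations_ms, bucket_ms=50):
    """Count invocations per fixed-width time bucket, keyed by the bucket's lower bound."""
    counts = Counter((int(d) // bucket_ms) * bucket_ms for d in durations_ms)
    return dict(sorted(counts.items()))


# Hypothetical cold-start durations in milliseconds, for illustration only.
sample = [190, 205, 210, 375, 380, 395, 230]
width = 50  # assumed bucket width in milliseconds

# Print a crude text histogram: one '#' per invocation in each bucket.
for start, count in bucket_counts(sample, width).items():
    print(f"{start:>5}-{start + width - 1:<5} ms  {'#' * count}")
```

With these invented values the counts cluster in the 200ms and 350ms buckets, which is the kind of double peak described below.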

The first thing we can see is that invocation times for functions outside of a VPC form two peaks in the chart, roughly around 200 and 380ms. This double-peak pattern is pretty consistent across memory configurations. I’d be curious to know the reason behind such a strong clustering effect. Was it random chance? The effect of some hidden cache? An effect of the global load on AWS at the time of running the experiment? I don’t have enough data to even make a guess here: reach out in the comments if you think you know more.

The second insight from the chart is that invocations inside a VPC have a very different distribution: there’s still a peak centred around 250ms, but there is also a very long tail, with some invocations taking 20 or even 30 seconds. Roughly 50% of the invocations fall within the tail, which contributes to the high average we’ve seen earlier.

In practice, this means that we can be reasonably sure that cold-start for a Lambda invocation outside of a VPC will not be more than 400ms. When it comes to running inside a VPC, however, cold-start could be 300ms, but it is just as likely to be 1 second, 5 seconds or even 20: there’s no way to predict it.
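One way to see why the average alone is misleading for a long-tailed distribution is to put it next to the median and a high percentile. The sketch below uses invented durations in which half the invocations sit in the tail; it relies on `statistics.quantiles`, which needs Python 3.8 or later.

```python
from statistics import mean, median, quantiles


def summarize(durations_ms):
    """Return the mean, median, 95th percentile and maximum of a set of durations."""
    # n=20 yields 19 cut points at 5%, 10%, ..., 95%; the last one is the 95th percentile.
    cuts = quantiles(durations_ms, n=20, method="inclusive")
    return {
        "mean": mean(durations_ms),
        "median": median(durations_ms),
        "p95": cuts[-1],
        "max": max(durations_ms),
    }


# Hypothetical VPC cold-start durations in milliseconds: half near the peak, half in the tail.
vpc_sample = [250, 260, 270, 300, 1000, 5000, 20000, 30000]

summary = summarize(vpc_sample)
for name, value in summary.items():
    print(f"{name:>6}: {value:.0f} ms")
```

In this made-up sample the mean is 7135ms while the median is 650ms, so a single slow outlier or two can dominate the average even when many invocations are fast.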

Conclusions

This leads us to a few interesting conclusions:

- Lambda functions inside a VPC have, on average, a longer cold-start time (4x to 30x in my observations)

- The penalty gets worse the more memory the function is configured with

- It’s much harder to predict the duration of an invocation inside a VPC, as the range is much wider

Note that Lambdas doing real work will have a higher execution time than my trivial handlers: adding more memory will benefit real Lambdas by reducing their execution time, while cold-start time will remain the same. The VPC penalty will probably still be felt, however.

Also note that these conclusions only apply to Ruby and Node.js. If you’re using a different runtime, especially one based on a compiled language, your mileage may vary.

Open questions

As with anything in life, diving deep into a subject is bound to raise more questions than answers. Here are a few avenues of inquiry for the future, based on my experience with this experiment:

- Testing again with realistic code in the handler to see if there is an inflection point when the benefits to execution time are so great that the VPC penalty becomes negligible

- Comparing cold-start measures with warm-starts, to see whether there’s a change in distribution, in addition to the change in average we expect
